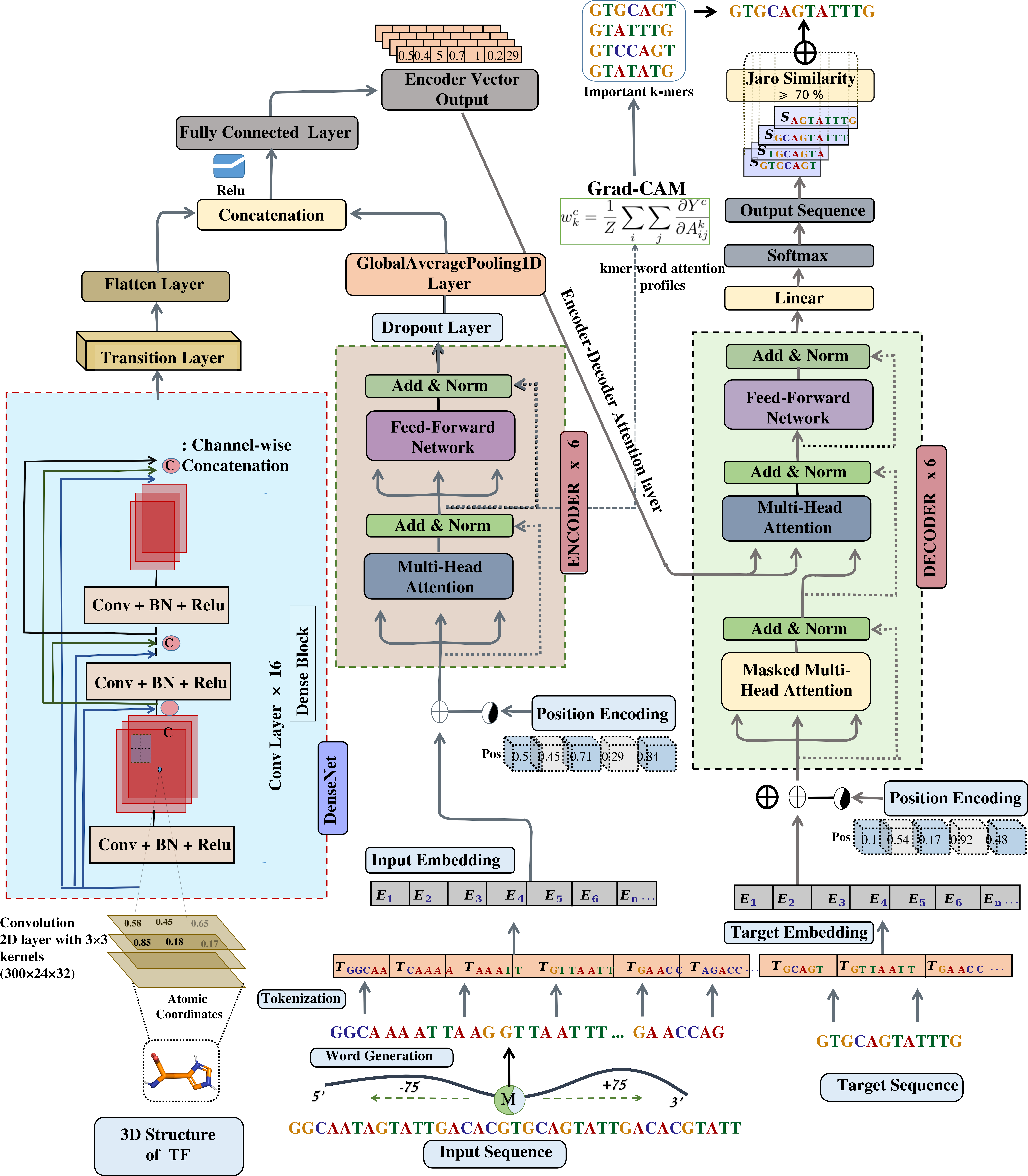

Figure: The PTF-Vāc Deep Co-learning system. Bi-modal deep-learning by Transformers-DenseNet Encoder:Decoder system was implemented to detect TFBS in TF binding regions derived from PTFSpot. The encoder processes binding region sequences represented in penta, hexa, and heptamer word representations, while the decoder receives the binding site information for the corresponding binding region. Six layers encoder:decoder pairs were incorporated. Simultaneously, a DenseNet consisting of 121 layers accepts input in the form of the associated TF's structure. The learned representations from the encoder and DenseNet are merged and passed to the encoder-decoder multi-head attention layer, which also receives input from decoder layers. Subsequently, the resulting output undergoes conditional probability estimation, playing a pivotal role in the decoding process. Following layer normalization, the model proceeds to calculate the conditional probability distribution over the vocabulary for the next token. Finally, the resultant tokens were converted back into words, representing the binding site for the given sequence.